Do you work on the IS side of GIS, in ESRI geodatabases? The day may come when you are faced with culling domain values from what seems like a gazillion tables, organizing and verifying the values, and loading them into multiple domains.

Sorting through domain values by visually inspecting each column and loading domains one by one can get tiresome. It may be that you decide that the best thing to do is to learn how to script and automate much of the domain value sorting, validating and loading process.

Whether you are just starting out and want some tips, or whether you don't like scripting and just want something that works--this blog is for you.

This series of posts on domains will put you on your way. Each post describes a python script tool that can be added to an ArcGIS custom toolbox. Collectively, the tools automate the domain workflow, reduce errors, and save time.

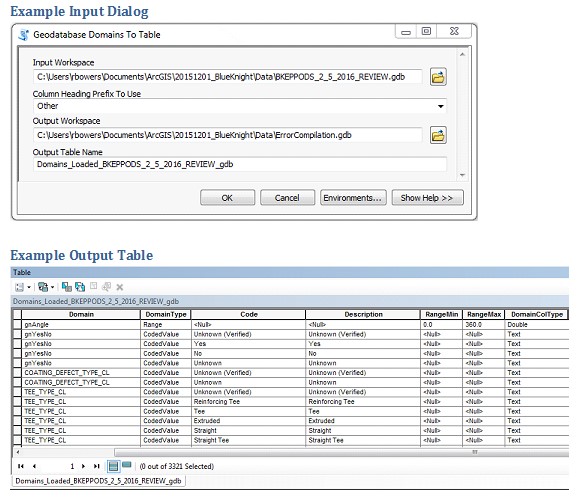

Summary Of Tool

The Export Geodatabase Domains tool exports all ESRI geodatabase domains and associated values to a table.

For

column heading prefixes, choose from: "For ETL Source", "For ETL

Target" or "Other. This will result in, for example, SourceDomain,

TargetDomain, or just Domain. Default is "Other".

Output table columns are:

Domain (domain name)

DomainType ("CodedValue" or "Range")

Code (CodedValue domains only--Code value)

Description (CodedValue domains only--Code description)

RangeMin (Range domains only--Minimum value in the range)

RangeMax (Range domains only--Maximum value in the range)

DomainColType (Domain column type)

join_field (Code and Description in lower case, concatenated with an underscore)

Code_Description (Code and Description, concatenated with an underscore)

Columns are populated as appropriate for each domain type. The last two columns are designed for quality assurance.

Use

the output from this tool for verifying and editing domain values, for

domain documentation, as well as for a domain loading table (with the Batch Domain Loading tool in this toolbox).

Illustration

Usage

There is no usage for this tool.

Syntax

GdbDomainsToTable_mytools (Input_Workspace, Column_Heading_Prefix_To_Use, Output_Workspace, Output_Table_Name)

| Parameter | Explanation | Data Type |

|---|---|---|

| Input_Workspace |

Dialog Reference Geodatabase whose domains and values you want to list ___________________ Python Reference Geodatabase whose domains and values you want to list |

Workspace |

| Column_Heading_Prefix_To_Use |

Dialog Reference Choice "For ETL Source", "For ETL Target" or "Other" - Default Other. ___________________ Python Reference Choice "For ETL Source", "For ETL Target" or "Other" - Default Other. |

String |

| Output_Workspace |

Dialog Reference Output workspace ___________________ Python Reference Output workspace |

Workspace |

| Output_Table_Name |

Dialog Reference Output table name ___________________ Python Reference Output table name |

String |

Code Samples

ExportGeodatabaseDomains.py

See below for tool source code.

import arcpy, traceback, sys, os

arcpy.env.overwriteOutput = True

def insert_rows(in_layer, field_list, rows_to_insert):

# USES DA.INSERTCURSOR TO INSERT ROWS OF DATA INTO INPUT TABLE.

# PARAMETERS: INPUT LAYER, LIST OF FIELD NAME STRINGS, LIST OF DATA ROW LISTS.

cursor = arcpy.da.InsertCursor(in_layer, field_list)

for row in rows_to_insert:

cursor.insertRow(row)

del cursor

def unicode2ascii(in_string):

'''CONVERTS A STRING WITH UNICODE CHARACTERS TO ASCII (TO USE AS STRING).

REMOVES LEFT AND RIGHT SLANTED SINGLE AND DOUBLE QUOTES AND M-DASHES'''

s = in_string

s = unicode(val).replace(u'\u2018', u"'").replace(u'\u2019', u"'").replace(u'\u2013', u'-').replace(u'\u201c', u'"').replace(u'\u201d', u'"')

s.encode('ascii')

return s

if __name__ == "__main__":

in_workspace = arcpy.GetParameterAsText(0) # WORKSPACE

prefix_chosen = arcpy.GetParameterAsText(1) # STRING, FILTER "For ETL Source",

# "For ETL Target", "Other"

out_workspace = arcpy.GetParameterAsText(2) # WORKSPACE

out_table_name = arcpy.GetParameterAsText(3) # STRING

try:

arcpy.AddMessage(" ")

# CREATE FIELD NAMES

if prefix_chosen == "For ETL Source":

n_prefix = "Source"

s_prefix = "SrcDom"

elif prefix_chosen == "For ETL Target":

n_prefix = "Target"

s_prefix = "TrgDom"

n_suffix = ""

else:

n_prefix = ""

s_prefix = ""

col_name = n_prefix + "Domain"

col_type = n_prefix + "DomainType"

col_code = n_prefix + "Code"

col_desc = n_prefix + "Description"

col_min = s_prefix + "RangeMin"

col_max = s_prefix + "RangeMax"

col_ctype = s_prefix + "DomainColType"

col_join = "join_field"

col_code_desc = "Code_Description"

arcpy.AddMessage(" Getting domain names from workspace...")

domains = arcpy.da.ListDomains(in_workspace)

arcpy.AddMessage(" Creating table...")

out_table = arcpy.CreateTable_management(out_workspace, out_table_name)

field_names = [col_name, col_type, col_code, col_desc, col_min, col_max, col_ctype, col_join, col_code_desc]

for fn in field_names:

arcpy.AddField_management(out_table, str(fn), "text", field_length=255)

arcpy.AddMessage(" Getting domain values from workspace...")

# CREATE LIST TO HOLD ROWS OF DATA TO INSERT INTO OUTPUT TABLE

rows_to_insert = []

# ITERATE DOMAINS

for domain in domains:

domain_name = domain.name

if domain.domainType == 'CodedValue':

t = []

# ITERATE DOMAIN VALUES

for val, desc in domain.codedValues.items():

# CONVERT UNICODE CHARACTERS TO ASCII TO REMOVE INVALID CHARS

asc_val = unicode2ascii(val)

asc_desc = unicode2ascii(desc)

# CREATE INSERT-ROW LIST AND APPEND TO ROWS-TO-INSERT LIST

t = [str(domain_name), str(domain.domainType), str(asc_val), str(asc_desc), None, None, str(domain.type),

str(domain_name.lower()) + "_" + str(asc_val).lower(), str(domain_name) + "_" + str(asc_val)]

rows_to_insert.append(t)

elif domain.domainType == 'Range':

t = []

# CREATE INSERT-ROW LIST FOR RANGE DOMAIN AND APPEND TO ROWS-TO-INSERT LIST

if domain.type in ("Long", "Short"):

# MAKE SURE INTEGERS COME OUT AS INTEGERS

t = [str(domain_name), str(domain.domainType), None, None, str(int(domain.range[0])), str(int(domain.range[1])),

str(domain.type), str(domain_name.lower()) + "_" + str(int(domain.range[0])) + "_" + str(int(domain.range[1])),

str(domain_name) + "_" + str(int(domain.range[0])) + "_" + str(int(domain.range[1]))]

else:

t = [str(domain_name), str(domain.domainType), None, None, str(domain.range[0]), str(domain.range[1]), str(domain.type),

str(domain_name.lower()) + "_" + str(domain.range[0]) + "_" + str(domain.range[1]),

str(domain_name) + "_" + str(domain.range[0]) + "_" + str(domain.range[1])]

rows_to_insert.append(t)

# POPULATE OUTPUT TABLE

arcpy.AddMessage(" Creating output table...")

insert_rows(out_table, field_names, rows_to_insert)

# SET OUTPUT PARAMETER

arcpy.SetParameter(4, out_table) # TABLEVIEW

arcpy.AddMessage(" ")

except:

# PRINT ERROR MESSAGES

tb = sys.exc_info()[2]

tbinfo = traceback.format_tb(tb)[0]

pymsg = tbinfo + "\n" + str(sys.exc_type)+ ": " + str(sys.exc_value)

arcpy.AddError("Python Messages: " + pymsg + " GP Messages: " + arcpy.GetMessages(2))

finally:

del in_workspace, prefix_chosen, out_workspace, out_table_name

del domains, rows_to_insert, out_table

del col_name, col_type, col_code, col_desc, col_min, col_max

Tags

geodatabase, domain, Describe, CreateTable, ListDomains, InsertCursor, AddField

Credits

Ruth Bowers, 2015, 2016.

Use limitations

ESRI geodatabases only. Tested as ArcGIS 10.2.2 python script tool in custom toolbox.